![SOLVED: Example 6: Find the stationary distribution of Markov chain in Example 4.](https://cdn.numerade.com/ask_images/6ec5b37fecc54d94bfa846d2628c51dd.jpg)

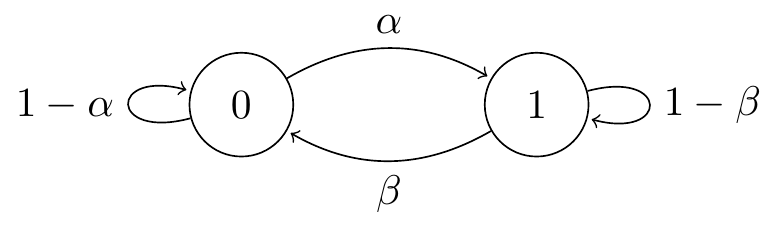

SOLVED: Example 6: Find the stationary distribution of Markov chain in Example 4. Solution: Let the stationary distribution be V = [v1 v2 v3] …

probability - What is the significance of the stationary distribution of a Markov chain given its initial state? - Stack Overflow

stochastic processes - Proof of the existence of a unique stationary distribution in a finite irreducible Markov chain. - Mathematics Stack Exchange

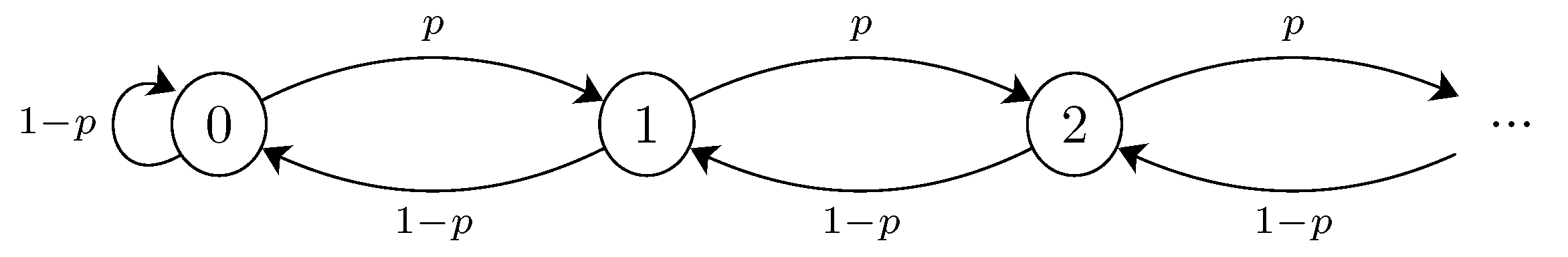

SOLVED: A stationary distribution of a Markov chain is a probability distribution that remains unchanged in the Markov chain as time progresses. Typically, it is represented as a row vector π whose

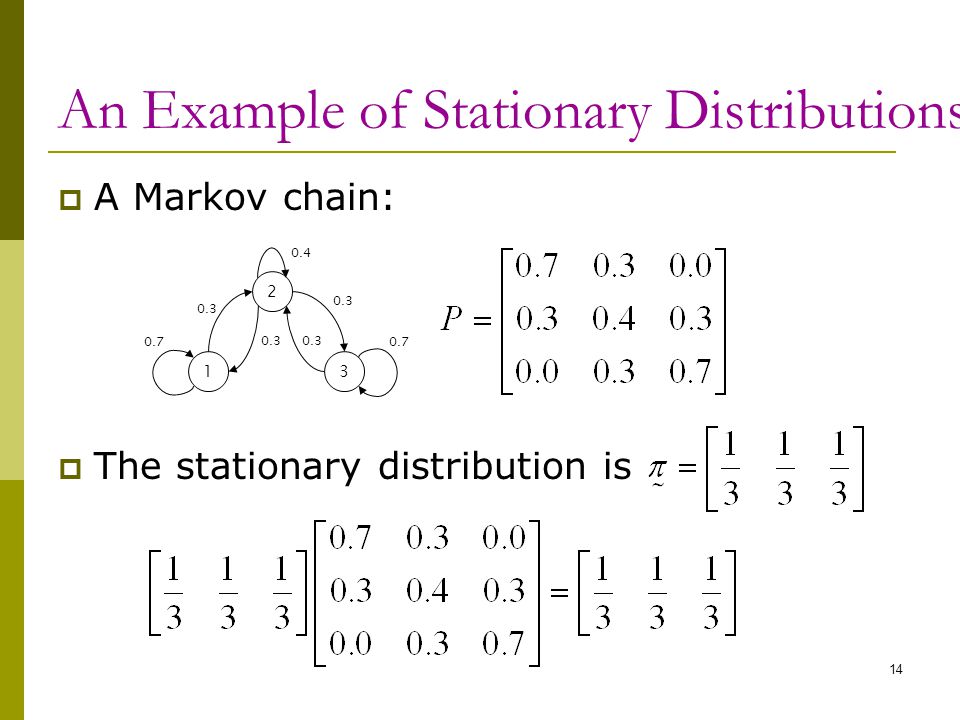

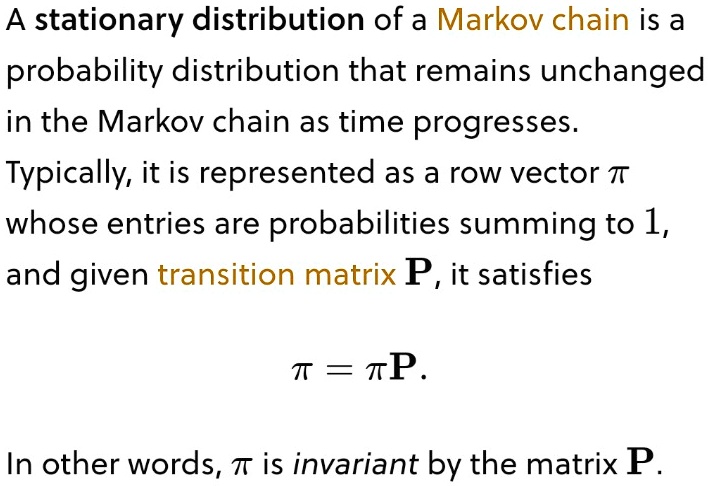

Part III: Markov Chains & Queueing Systems. 10. Discrete-Time Markov Chains; 11. Stationary Distributions & Limiting Probabilities; 12. State Classification. - ppt download

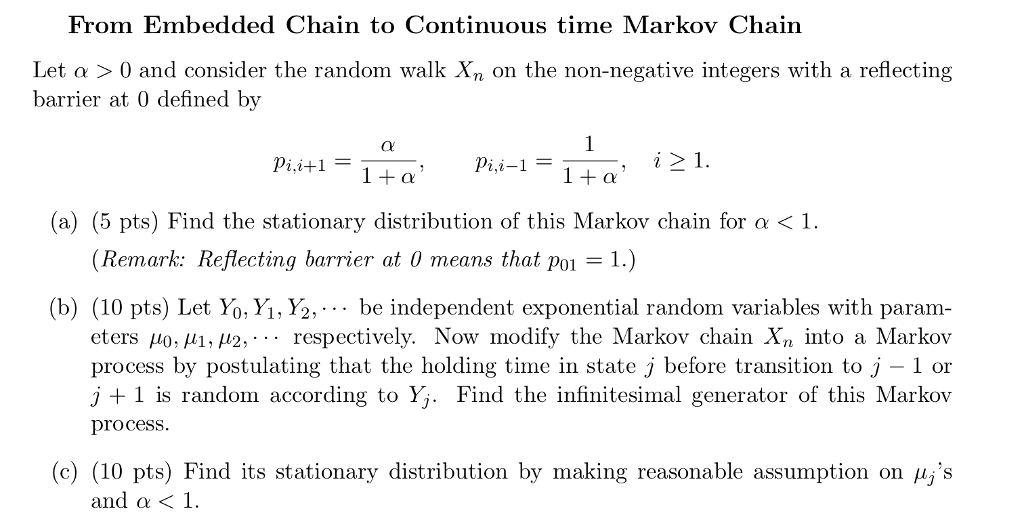

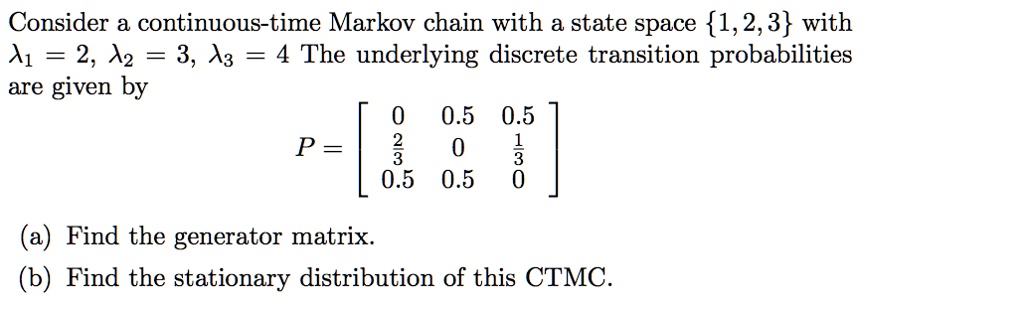

SOLVED: Consider a continuous-time Markov chain with state space {1, 2, 3} and λ1 = 2, λ2 = 3, λ3 = 4. The underlying discrete transition probabilities are given by P = [0 0.5 0.5; …]

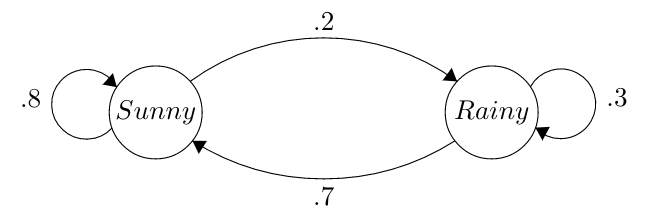

Please can someone help me to understand stationary distributions of Markov Chains? - Mathematics Stack Exchange

![Solved Models 1. [20 points] Continuous-Time Markov Chain. | Chegg.com](https://media.cheggcdn.com/media%2F87f%2F87f632b6-8af1-42a6-937e-9c70fd2dbfdc%2Fimage)

![[CS 70] Markov Chains – Finding Stationary Distributions - YouTube](https://i.ytimg.com/vi/YIHSJR2iJrw/maxresdefault.jpg)